SmartHome Chat Bot

Ever since I watched the movie "Minority Report", I have dreamed of having a proper conversation with my home. Whenever I want, I can ask questions such as "What's the temperature?" or "Are all doors closed?". Furthermore, I can actively control my home with sentences such as "Set the temperature in the kitchen to 19°C!". A text layer is the most basic variant. It can be used together with a speech-to-text system (voice recognition) or via a text-based interface. It can also be used by other bots, making computing without human involvement a reality.

In this article we'll explore the possibility of integrating such an intelligent text recognition system into a state-of-the-art smart home system. For this task we'll use the Microsoft Bot Framework together with Microsoft Cognitive Services, specifically the Language Understanding Intelligent Service (LUIS). Only a short introduction to these technologies will be provided. For a much more detailed introduction, see the article An Introduction to the Microsoft Bot Framework.

We will start with a short overview of the smart home solution we are going to use. In the architecture section we outline the details of the solution's architecture, followed by a quick introduction to the Microsoft Bot Framework and LUIS. Then we'll spend most of the time covering our model and the skill adapter. Finally, we'll go into the details of providing a proper mock, not only on the software side but also via special hardware. For our purposes we use the very lightweight CC3200 from Texas Instruments.

Background

Coming up with a secure, reliable, and well-performing smart home platform is difficult. If we add another requirement, such as covering a broad variety of devices (integrating and connecting them), the task becomes almost impossible. Luckily, commercial systems exist. For this article we've chosen the RWE SmartHome system. It is the market leader in Germany and is known for its secure platform. Furthermore, its devices and services cover almost every possible use case.

The RWE SmartHome product requires a central unit called SHC (short for SmartHome Controller). The SHC acts as a gateway for all integrated devices. This gateway connects all devices to each other and to the back end, and it performs the rule management that triggers actions when programmed conditions are met. Our bot service will talk to the SHC to read states or write commands, using the same interface that standard (UI) clients usually use.

Besides some external third-party devices, the smart home solution from RWE comes with a broad range of core devices. We use the radiator thermostats as temperature and humidity sensors in our system. These sensors provide information about their states, e.g., temperatures and relative humidity values. Furthermore, the radiator thermostat is more than just a sensor; it also contains an actuator. Since the thermostats are mounted on the radiators, they can be used to change the room temperature.

Additionally, we can use window/door sensors to detect whether any windows or doors are still open. With the help of pluggable switches we can control any kind of lighting. Finally, motion detectors give us the ability to recognize movement. As we can see, the possibilities seem endless. All we need to do is obtain and transform the information.

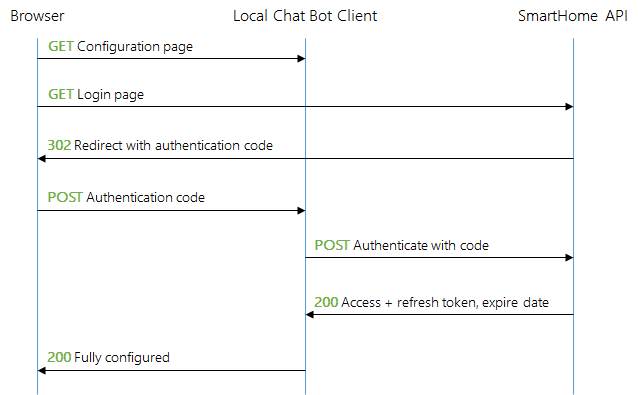

In order to talk to the gateway we need to communicate with the back end operated by RWE. It relies on standards such as a RESTful web API and authentication via OAuth 2. All we need is a registered client. The API also comes with a WebSocket channel to listen for events. However, in this article we assume that only passive actions (replying to a request) are performed. No active actions (informing about some change of state) will be taken.

The Solution

Now that we know the (real) smart home solution used by our bot, we need to go through the steps of creating the smart home bot system. We start with the high-level architecture and work our way down to the implementation. In the following section we will then provide some other smart home solutions for our bot, either in the form of a software mock or as a very simple and rudimentary DIY hardware setup using a single TI CC3200 or some TI SensorTags connected via Bluetooth.

Architecture

Our architecture looks as follows. We start with a local chat bot client that runs on a Raspberry Pi. We use a Raspberry Pi as it is powerful enough to run Node.js without trouble. Furthermore, as a full Linux distribution runs on the Pi, the solution can be extended with plenty of other functionality, e.g., monitoring general web resources, checking a local file server, or providing some multimedia streaming and information. In addition, the Raspberry Pi does not consume a lot of power, which makes it ideal for running continuously. We'll be using the Raspberry Pi 3, as it comes with integrated WiFi and Bluetooth. The latter will be used later in the article for connecting the TI SensorTags via Bluetooth Low Energy (BTLE).

The local chat bot client is connected to our central bot connector proxy via a WebSocket channel. The connector proxy is hosted in Microsoft Azure. It provides the callback used by the real Microsoft Bot Connector and the channel registration for the local chat bot client. WebSockets offer the advantage of a persistent, bidirectional communication channel, which allows us to both send and receive information. As only the bot connector proxy has a known DNS name, we needed a way to open a connection between an unknown address and a known address; hence the local client initiates the connection to the proxy.

There is yet another party we need to connect to. The local chat bot client is also connected to the smart home system. For the configuration of this part we host a small web server inside the Raspberry Pi. We'll go into its details in a minute.

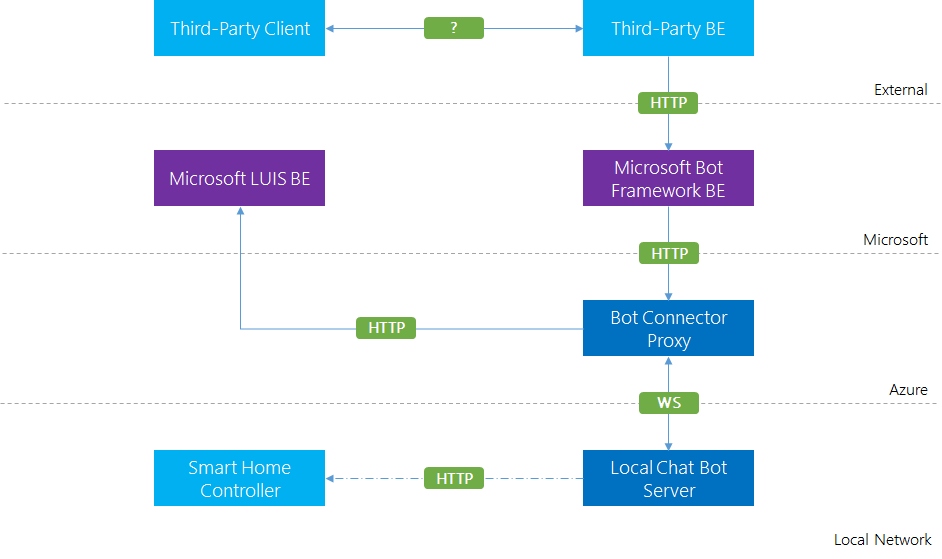

The high-level architecture diagram thus looks as follows. Purple components are supplied by Microsoft, light blue components are third-party. Our own components are filled with the darker blue.

Only the connection type between the client (e.g., Skype for Windows) and its associated back end (e.g., Skype) remains unknown. However, we don't need to care about it. The Microsoft Bot Framework is responsible for aggregating all kinds of external systems (currently the list is limited; however, more channels will be added in the future).

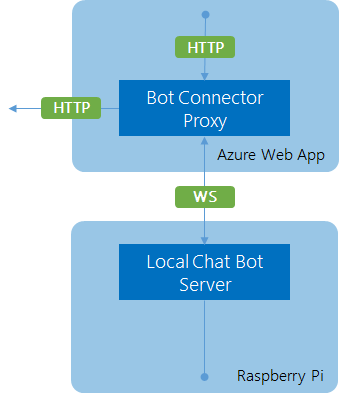

Thus in the end we only care about the following two components. The proxy offers an endpoint for the Bot Framework callbacks. Furthermore, it also offers another endpoint to establish WebSocket connections with local chat bot clients. Finally, it explicitly uses HTTP to run queries against LUIS, even though this could be (and will be) abstracted into an interface as well.

The local chat bot client on the other hand connects to the proxy via WebSocket and uses an internal interface to gather information about the home. The primary implementation of this interface is the connection to the RWE SmartHome solution, however, in this article we will also explore several other possibilities (and show the code for implementing these possibilities). We'll see that the provided architecture gives us a lot of flexibility.

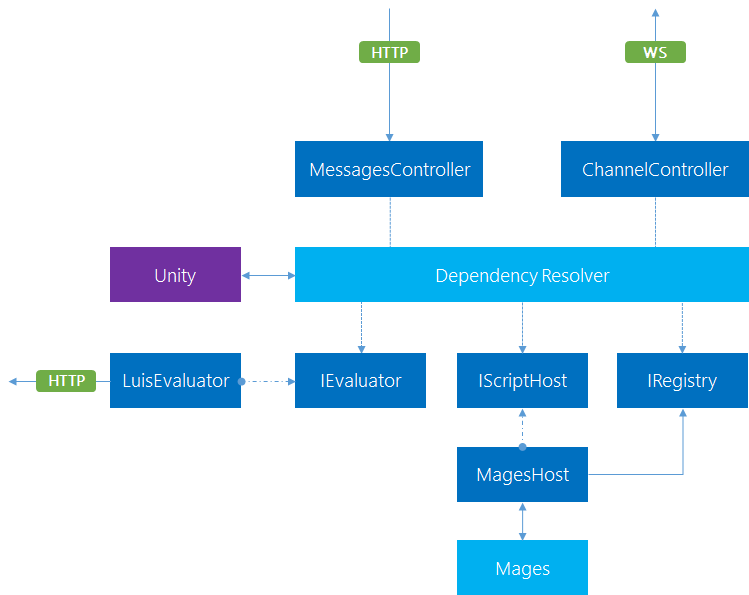

Digging deeper, we find the architecture of the previously mentioned components. We'll start with the proxy. The proxy consists of two endpoints that are placed in their own controllers. The purpose of the messages controller is simply to act as a proper callback for the Microsoft Bot Connector. This means we need to receive the message and determine what to do with it. Essentially, it all boils down to mapping the message from some user in some channel to a registered local chat bot client. Once this succeeds, we can start evaluating the message using LUIS.

The diagram below shows that a dependency resolver provides the services for finding the connection via the registry and for performing the evaluation via LUIS.

One important part of this architecture is the inclusion of a scripting engine, MAGES. Details about MAGES can be found in the article MAGES - Ultimate Scripting for .NET. We use MAGES to avoid yet another factory with an arbitrary number of arguments and multiple deserialization questions. Instead, the local chat bot client only needs to send a message that triggers running the right MAGES commands. This can be compared to allowing a foreign party to execute shell commands. However, the security risks are much lower, as MAGES allows us to easily build a sandbox.

All in all, the proxy should be quite lightweight by design. Its only purpose is to forward the incoming messages to the corresponding local chat bot client. Furthermore, it already performs some intermediate tasks, such as evaluating the query to obtain its intents and entities.

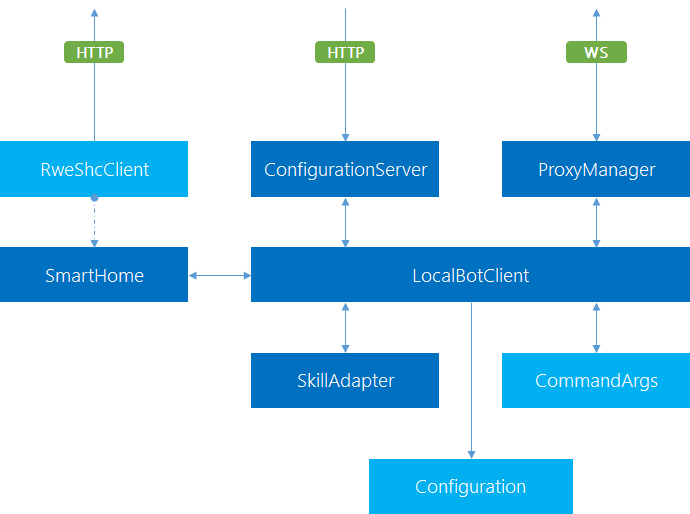

What does the architecture of the local chat bot client look like? The picture below answers this question.

The application centers around the bot client. This part first reads the configuration and then starts all components accordingly. Most importantly, all components are wired together indirectly via events. This loose coupling makes it possible to test individual components without requiring the whole application. The most important flow starts at the ProxyManager, which triggers the SmartHome component via the skill adapter. The skill adapter will be presented later on. In short, the skill adapter maps intents, e.g., get-temperature, to the appropriate actions using the interface provided by the SmartHome component.
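The following minimal JavaScript sketch (the language of the local client) shows what this event-based wiring could look like. FakeProxyManager, FakeSkills, the 'request' event, and the canned answers are hypothetical stand-ins. Only the 'success' and 'failure' event names follow the skill adapter of this article. EventEmitter calls its listeners synchronously, so the answers are already recorded when receive() returns.

```javascript
const { EventEmitter } = require('events');

// Hypothetical stand-in for the ProxyManager: it only emits and records.
class FakeProxyManager extends EventEmitter {
  constructor () {
    super();
    this.sent = []; // answers that would go back over the WebSocket
  }

  receive (id, intent, entities) {
    // In the real client this would be triggered by a WebSocket message.
    this.emit('request', id, intent, entities);
  }

  answer (id, message) {
    this.sent.push({ id, message });
  }
}

// Hypothetical stand-in for the skill adapter.
class FakeSkills extends EventEmitter {
  resolve (id, intent, entities) {
    if (intent === 'get-temperature') {
      this.emit('success', id, 'It is 21°C.');
    } else {
      this.emit('failure', id);
    }
  }
}

// The bot client knows both components; they do not know each other.
function wire (proxy, skills) {
  proxy.on('request', (id, intent, entities) => skills.resolve(id, intent, entities));
  skills.on('success', (id, message) => proxy.answer(id, message));
  skills.on('failure', (id) => proxy.answer(id, 'Sorry, I did not understand that.'));
}

const proxy = new FakeProxyManager();
const skills = new FakeSkills();
wire(proxy, skills);
proxy.receive('42', 'get-temperature', []);
proxy.receive('43', 'open-doors', []);
```

Because each component can be replaced by a fake, a test can exercise the skill adapter without a WebSocket connection or a real smart home.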

As already mentioned, the local chat bot client also runs a web server. This web server only serves quick information and configuration management. It has no other purpose.

To be flexible, the system comes with a command line parser, such that the application can be started with different configurations or verbosity levels.
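A command line parser along these lines could be built on the util.parseArgs function built into Node.js (available since Node.js 18.3 and 16.17). The option names --environment and --verbose, and the fallback to the release environment, are assumptions. The article does not list the actual flags.

```javascript
const { parseArgs } = require('util'); // built in since Node.js 18.3 / 16.17

// Hypothetical options: the article only says that the configuration
// (environment) and the verbosity can be chosen on the command line.
function readOptions (argv) {
  const { values } = parseArgs({
    args: argv,
    options: {
      environment: { type: 'string', short: 'e' },
      verbose: { type: 'boolean', short: 'v' }
    }
  });

  // Build a plain object so callers do not depend on parseArgs internals.
  return {
    environment: values.environment || 'release', // assumed default
    verbose: values.verbose === true
  };
}

// Usage in the real entry point (e.g. node index.js --environment debug -v):
// const options = readOptions(process.argv.slice(2));
```

In strict mode, which is the default, parseArgs throws on unknown flags. That is usually preferable to silently ignoring a typo.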

Microsoft Bot Framework and LUIS

In the introduction we already outlined that this article won't give a detailed introduction to the Microsoft Bot Framework or LUIS. Nevertheless, as these two are the key components we should have a closer look at them.

Our proxy is written in C# using the ASP.NET Web API framework together with the Microsoft Bot Builder library in one of the most recent versions. The /api/messages endpoint reacts to POST requests by calling the following action. The body of the request is deserialized into an Activity instance.

```csharp
public async Task<HttpResponseMessage> Post([FromBody]Activity activity)
{
    if (activity.Type == ActivityTypes.Message)
    {
        /* ... see below ... */
    }
    else
    {
        SystemMessageHandlers.Handle(activity);
    }

    return Request.CreateResponse(HttpStatusCode.OK);
}
```

Handling System Messages

The most important flow obviously involves a standard message. System messages are handled generically. Trivially, we may solve them as follows. Typically, however, we should also include some user management that comes into play once conversations change (e.g., a new participant arrived or an existing one left) or if users leave the chat. In this article we won't deal with conversation persistence and legacy entities, i.e., we can safely ignore these system messages.

```csharp
using System;
using System.Collections.Generic;
using Microsoft.Bot.Connector;

public static class SystemMessageHandlers
{
    // Each system activity type maps to a handler; all of them are no-ops for now.
    private static readonly Dictionary<String, Func<Activity, Activity>> handlers = new Dictionary<String, Func<Activity, Activity>>
    {
        { ActivityTypes.DeleteUserData, activity => null },
        { ActivityTypes.ConversationUpdate, activity => null },
        { ActivityTypes.ContactRelationUpdate, activity => null },
        { ActivityTypes.Typing, activity => null },
        { ActivityTypes.Ping, activity => null },
    };

    public static Activity Handle(Activity message)
    {
        var handler = default(Func<Activity, Activity>);

        if (handlers.TryGetValue(message.Type, out handler))
        {
            return handler.Invoke(message);
        }

        return null;
    }
}
```

Speaking of user messages, it is time to take a closer look at how standard messages are handled. The following code replaces the placeholder comment in the Post action above:

```csharp
var serviceUrl = new Uri(activity.ServiceUrl);
var connector = new ConnectorClient(serviceUrl);
var connection = _registry.GetFor(activity.ChannelId, activity.From.Id);

if (connection != null)
{
    await AskQuestionAsync(activity, serviceUrl, connection);
}
else
{
    await TryRegisterAsync(activity, connector);
}
```

Now this is getting interesting! We first try to find a registration for the given account. If one exists, we go ahead and ask the question. Otherwise, we try to register this client. The registration flow is one of the trickier parts of our implementation.
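The proxy itself is written in C#. Purely to illustrate the lookup, here is a minimal JavaScript sketch of a registry that could sit behind a GetFor-style call. The Registry class, its method names, and the in-memory Map storage are assumptions, not the proxy's actual implementation. The link and unlink methods mirror the Link and Unlink commands of the AdapterApi.

```javascript
// Hypothetical in-memory registry: (channel, account) -> local chat bot connection.
class Registry {
  constructor () {
    this.links = new Map();
  }

  // A NUL separator keeps ('a:b', 'c') and ('a', 'b:c') apart.
  static key (channelId, accountId) {
    return channelId + '\u0000' + accountId;
  }

  link (channelId, accountId, connection) {
    this.links.set(Registry.key(channelId, accountId), connection);
  }

  unlink (channelId, accountId) {
    return this.links.delete(Registry.key(channelId, accountId));
  }

  // Mirrors _registry.GetFor(...): null means "not registered yet".
  getFor (channelId, accountId) {
    return this.links.get(Registry.key(channelId, accountId)) || null;
  }
}
```

A real proxy would also need to persist these links or rebuild them when a local client reconnects. This sketch leaves that out.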

There are multiple ways to link a provider account to a local chat bot:

- We can enter the providers (channel name + account id) directly in the configuration part of the local chat bot

- We can use the same user / password combination as used for the smart home connection

- We can use a one-time token that is generated on the local chat bot

We've decided to go for the latter. Why? The first option would potentially be the simplest. However, the account id may not be known (e.g., for Skype this is a fairly long number that I've never seen anywhere else), so this option is excluded. The second option seems really insecure. Consider typing your user name and password in clear text (!) in a chat that is synced across all your devices (with persistent history!). Horrible... The third option is quite secure. We can only obtain the one-time token by accessing the (locally available) configuration website. The code is then visible in the conversation (insecure, yes), but as a new token is generated once the old one has been used, this is not a big problem.
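The one-time token scheme fits in a few lines. This is a hypothetical sketch, not the implementation shipped with the article. The OneTimeToken class, the 6-byte (12 hex character) token length, and the rule that only a successful attempt rotates the token are all assumptions. crypto.timingSafeEqual keeps the comparison from leaking timing information.

```javascript
const crypto = require('crypto');

// Hypothetical token holder for the local chat bot: the current token is
// shown on the configuration page and replaced as soon as it is used.
class OneTimeToken {
  constructor (bytes = 6) {
    this.bytes = bytes;
    this.current = this.generate();
  }

  generate () {
    return crypto.randomBytes(this.bytes).toString('hex');
  }

  // Returns true at most once per token, then rotates to a new token.
  consume (candidate) {
    const expected = Buffer.from(this.current);
    const given = Buffer.from(String(candidate));
    // timingSafeEqual throws on different lengths, so check that first.
    const ok = given.length === expected.length && crypto.timingSafeEqual(given, expected);

    if (ok) {
      this.current = this.generate();
    }

    return ok;
  }
}
```

A production version might also rate-limit or rotate after repeated failed attempts, because a short token is easy to guess by brute force.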

MAGES Integration

Finally, we need to provide the option to revoke previously granted access. This will be done from the local chat bot. We already described that the local chat bot only sends command-like messages to the proxy. These commands may only use the following functions; we remove any kind of global API. The binding and everything else is done in MAGES.

```csharp
using System;

static class AdapterApi
{
    public static void Register(String id, String shcSerial)
    {
        // ...
    }

    public static void Update(String id, String authCode)
    {
        // ...
    }

    public static void Link(String id, String provider, String account)
    {
        // ...
    }

    public static void Unlink(String id, String provider, String account)
    {
        // ...
    }

    public static void Answer(String id, String request, String answer)
    {
        // ...
    }
}
```

To complete this picture, we show how the binding is actually instantiated. No global functions are included. If any were needed, we would add them explicitly via a whitelist; blacklists suffer from many security problems and should not be used. The AdapterApi class serves as the basis for the functions, which are included directly without a namespace.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.ObjectModel;
using System.Diagnostics;
using Mages.Core;

sealed class MagesScriptingHost : IScriptingHost
{
    private readonly Engine _engine;

    public MagesScriptingHost()
    {
        var scope = new Dictionary<String, Object>();

        _engine = new Engine(new Configuration
        {
            IsEngineExposed = false,
            IsEvalForbidden = true,
            IsThisAvailable = true,
            Scope = new ReadOnlyDictionary<String, Object>(scope)
        });

        // Start from an empty set of globals and expose only the AdapterApi functions.
        _engine.Globals.Clear();
        _engine.SetStatic(typeof(AdapterApi)).Scattered();
    }

    public void Execute(String command)
    {
        // Errors are logged instead of propagated, so a faulty command cannot tear down the connection.
        try { _engine.Interpret(command); }
        catch (Exception ex) { Trace.TraceError(ex.Message); }
    }
}
```

If a command fails, it may, in the worst case, destroy the WebSocket connection. We can assume that such a fatal command could only come from an external or uncontrolled local bot client, and in such cases we could live with the interruption of the given WebSocket connection. Nevertheless, we've decided to catch errors from all clients, so that the WebSocket connection survives such fatal commands.
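The local chat bot client can also build the commands it sends to the proxy defensively. buildCommand is hypothetical and not part of the article's sources. It assumes that MAGES string literals accept JSON-style backslash escapes, which should be verified against the MAGES documentation before relying on it. Mirroring the whitelist idea, it only accepts the five AdapterApi function names.

```javascript
// Only the functions bound from AdapterApi may be called.
const ALLOWED_COMMANDS = new Set(['Register', 'Update', 'Link', 'Unlink', 'Answer']);

// Hypothetical client-side helper that turns a call into a command string.
function buildCommand (name, ...args) {
  if (!ALLOWED_COMMANDS.has(name)) {
    throw new Error('Command not allowed: ' + name);
  }

  // Assumption: MAGES accepts double-quoted strings with JSON-style escapes,
  // so quotes inside an answer cannot break out of the string literal.
  const literals = args.map((arg) => JSON.stringify(String(arg)));
  return name + '(' + literals.join(', ') + ')';
}
```

This check only protects honest clients from producing malformed commands. The real security boundary remains the sandbox on the proxy side.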

LUIS Evaluation

Coming back to the original topic, we still need to cover how LUIS evaluations are processed. We've already spotted two paths that can be taken for incoming messages: either AskQuestionAsync or TryRegisterAsync takes over. The latter has already been discussed. Now it's time to shed some light on the former.

The AskQuestionAsync method uses an instance of IEvaluator to evaluate the message. The result is then sent to the connection that has been retrieved previously. To use LUIS for this evaluation, the LuisEvaluator is registered as the IEvaluator. Its implementation is shown below.

```csharp
using System;
using System.Net.Http;
using System.Threading.Tasks;
using System.Web.Configuration;

public sealed class LuisEvaluator : IEvaluator
{
    private static readonly String ApplicationId = WebConfigurationManager.AppSettings["LuisApplicationId"];
    private static readonly String SubscriptionId = WebConfigurationManager.AppSettings["LuisSubscriptionId"];

    // One shared HttpClient avoids exhausting sockets when many requests arrive.
    private static readonly HttpClient Client = new HttpClient();

    public async Task<String> EvaluateAsync(String input)
    {
        var query = Uri.EscapeDataString(input);
        var uri = $"https://api.projectoxford.ai/luis/v1/application?id={ApplicationId}&subscription-key={SubscriptionId}&q={query}";

        using (var msg = await Client.GetAsync(uri))
        {
            if (msg.IsSuccessStatusCode)
            {
                // The raw JSON is returned as-is.
                return await msg.Content.ReadAsStringAsync();
            }
        }

        return null;
    }
}
```

We make no effort to deserialize the JSON into an object. The string representation is sufficient, as it goes directly to the local chat bot client waiting for input on the Raspberry Pi.
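Because the proxy forwards the raw LUIS response, the local chat bot client has to parse it before handing it to the skill adapter. This minimal sketch assumes the LUIS v1 response shape: an intents array of { intent, score } objects and an entities array of { entity, type, ... } objects. The interpret function and its mapping to { type, name } objects (the fields the get-temperature skill reads) are hypothetical.

```javascript
// Hypothetical client-side helper: raw LUIS JSON -> { intent, entities }.
// Assumption: LUIS v1 returns "intents" ({ intent, score }) and
// "entities" ({ entity, type, ... }); check this against your LUIS version.
function interpret (json) {
  let result = null;

  try {
    result = JSON.parse(json);
  } catch (err) {
    // Malformed input is treated like "no intent recognized".
  }

  if (!result || typeof result !== 'object') {
    return { intent: null, entities: [] };
  }

  const intents = Array.isArray(result.intents) ? result.intents : [];
  // The top-scoring intent wins, whatever its margin.
  const top = intents.reduce((best, cur) => (best === null || cur.score > best.score ? cur : best), null);
  // Map each entity to the { type, name } shape the skills read.
  const entities = (Array.isArray(result.entities) ? result.entities : [])
    .map((e) => ({ type: e.type, name: e.entity }));

  return { intent: top ? top.intent : null, entities };
}
```

A null intent can be passed to the skill adapter directly, which then reports a failure.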

The implementation of the AskQuestionAsync method also shows us one more interesting thing. The communication is truly asynchronous: we don't use the async state machinery to hold an instance and come back later; in fact, we don't know whether (and how) our request will be answered. We therefore need a way to identify the request for later use. This is important, as the request determines the channel of the answer.
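One way to keep track of such requests is a table keyed by a fresh id, with entries that expire after a fixed time. The proxy does this in C#; this JavaScript sketch only illustrates the idea. PendingRequests, its five-minute default, and the injectable clock (which makes expiry testable) are assumptions.

```javascript
const crypto = require('crypto');

// Hypothetical pending-request table: every outgoing request gets a fresh id
// that must come back with the answer; entries expire after ttlMs.
class PendingRequests {
  constructor (ttlMs = 5 * 60 * 1000, now = Date.now) {
    this.ttlMs = ttlMs;
    this.now = now;
    this.entries = new Map();
  }

  add (info) {
    const id = crypto.randomUUID();
    this.entries.set(id, { info, expires: this.now() + this.ttlMs });
    return id;
  }

  // Returns the stored info once; late or unknown answers yield undefined.
  take (id) {
    const entry = this.entries.get(id);
    this.entries.delete(id);

    if (!entry || entry.expires < this.now()) {
      return undefined;
    }

    return entry.info;
  }

  // Drops expired entries, e.g. from a periodic setInterval timer.
  prune () {
    const t = this.now();

    for (const [id, entry] of this.entries) {
      if (entry.expires < t) {
        this.entries.delete(id);
      }
    }
  }
}
```

Removing the entry on take() also ensures that a duplicated answer is delivered at most once.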

The following code snippet contains all the important information. A new RequestInfo object is created to hold the information about the performed request. The request is cached for a certain time (e.g., 5 minutes) before it is removed from the cache. That means we never deliver answers to queries that are older than 5 minutes, guaranteed!

```csharp
private async Task AskQuestionAsync(Activity activity, Uri serviceUrl, ConnectionInfo connection)
{
    var message = activity.Text ?? String.Empty;
    var id = Guid.NewGuid();
    var evaluation = await _evaluator.EvaluateAsync(message);
    var request = new RequestInfo
    {
        User = activity.From,
        Bot = activity.Recipient,
        Conversation = activity.Conversation,
        ServiceUrl = serviceUrl
    };

    await connection.SendRequestAsync(id, request, evaluation);
}
```

At this point in time it makes sense to have a closer look at the LUIS project definition.

LUIS Project

The LUIS project can be viewed (i.e., exported) as a JSON file. This file contains all the information that represents our model. For the source code attached to this article, it is sufficient to illustrate the simplest model, consisting of a single intent with an optional entity. Of course, the general rule still holds: we need enough utterances (i.e., data points) to gain some accuracy in the identification provided by LUIS.

In the simplest case (a single intent for getting the temperature, with an optional location entity) we end up with a project definition containing a set of obvious utterances similar to the following one.

```json
{
  "luis_schema_version": "1.3.0",
  "name": "SmartHomeChatBot",
  "desc": "Language understanding for a smart home platform.",
  "culture": "en-us",
  "intents": [
    { "name": "None" },
    { "name": "get-temperature" }
  ],
  "entities": [
    { "name": "location" }
  ],
  "composites": [],
  "bing_entities": [
    "temperature",
    "number",
    "datetime"
  ],
  "actions": [],
  "model_features": [],
  "regex_features": [],
  "utterances": [
    { "text": "get the temperature.", "intent": "get-temperature", "entities": [] },
    {
      "text": "get the temperature in the bathroom.",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 5, "endPos": 5 } ]
    },
    { "text": "what's the temperature.", "intent": "get-temperature", "entities": [] },
    {
      "text": "temperature in the kitchen.",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 3, "endPos": 3 } ]
    },
    {
      "text": "tell me the temperature in the living room.",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 6, "endPos": 7 } ]
    },
    { "text": "show me the temperature.", "intent": "get-temperature", "entities": [] },
    {
      "text": "temperature in my office",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 3, "endPos": 3 } ]
    },
    { "text": "what's your name", "intent": "None", "entities": [] },
    { "text": "this sentence is bogus", "intent": "None", "entities": [] },
    { "text": "no relevance to anything", "intent": "None", "entities": [] },
    { "text": "foobar", "intent": "None", "entities": [] },
    {
      "text": "how warm is it in the bathroom",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 6, "endPos": 6 } ]
    },
    { "text": "ho", "intent": "None", "entities": [] },
    { "text": "give me the temperature", "intent": "get-temperature", "entities": [] },
    {
      "text": "what's the temperature in the kitchen?",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 7, "endPos": 7 } ]
    },
    {
      "text": "temperature in the living room",
      "intent": "get-temperature",
      "entities": [ { "entity": "location", "startPos": 3, "endPos": 4 } ]
    },
    { "text": "sorry", "intent": "None", "entities": [] },
    { "text": "temperature at home?", "intent": "get-temperature", "entities": [] },
    { "text": "what's the temperature", "intent": "get-temperature", "entities": [] },
    { "text": "foo", "intent": "None", "entities": [] },
    { "text": "hi", "intent": "None", "entities": [] },
    { "text": "how hot is it ?", "intent": "get-temperature", "entities": [] },
    { "text": "tell me the temperature", "intent": "get-temperature", "entities": [] },
    { "text": "what's the temperature?", "intent": "get-temperature", "entities": [] }
  ]
}
```

The general tendency is that more utterances increase the accuracy. However, as the number of intents grows, the number of utterances needed to keep the accuracy stable grows quickly. In practice this is not much of a problem, since the top hit (with whatever margin) can, by definition, be considered the right choice. Hence we should care less about keeping the accuracy stable and more about whether we end up with the correct results. Once we spot incorrect results in the LUIS evaluation at some point in the future, we should add more utterances to balance the system in our favor.

The number of entities strongly depends on the complexity of the intents. Usually, we want to use as many predefined entities as possible. However, even though the list is quite exhaustive, we may not find the entity we are after. Entities are easier to learn and identify than intents and should be considered a second-order problem.

We will now switch gears and look at the local chat bot client, starting with a closer look at the skill adapter.

Skills: Combining Intentions and Entities

Any kind of query that comes from the proxy to the client will be transported as the result of an evaluation with LUIS. Therefore, we'll use a special adapter to handle such requests. We call it the skill adapter. Essentially, it maps intents to JavaScript modules and calls the function exported from the module with the smart home adapter and the given entities.

The following code shows what the skill adapter looks like. It is a simple class that requires a smart home adapter to be created. Skills are resolved in the resolve method, where some magic happens. It boils down to looking for JavaScript files in the skills subfolder that match the name of the given intent (this is our convention).

Most importantly, we only use cached modules if they are still up to date. Otherwise, we clear the cache and reload the corresponding module.

```javascript
const fs = require('fs');
const path = require('path');
const helpers = require('./helpers.js');
const EventEmitter = require('events').EventEmitter;

class Skills extends EventEmitter {
  constructor (shc) {
    super();
    this.table = { };   // intent name -> { changed, execute }
    this.shc = shc;     // smart home adapter handed to every skill
  }

  resolve (id, intent, entities) {
    // Convention: the skill for an intent lives in skills/<intent>.js
    const fn = path.join(__dirname, 'skills', intent + '.js');

    if (!intent || !fs.existsSync(fn)) {
      return this.emit('failure', id);
    }

    // stats.mtime is already a Date; reload the module if the file is newer.
    const changed = fs.statSync(fn).mtime;

    if (!this.table[intent] || changed > this.table[intent].changed) {
      this.table[intent] = {
        changed: changed,
        execute: helpers.getModule(fn)
      };
    }

    this.table[intent].execute(this.shc, entities).then((message) => {
      this.emit('success', id, message);
    }).catch(() => {
      this.emit('failure', id);
    });
  }
}

module.exports = Skills;
```

We use the EventEmitter to emit events in the standard Node.js way, without much effort on our side. The question now is: What does such a skill look like?

In the following snippet we see the definition of the get-temperature skill. We could write it a little bit shorter (or, of course, much longer), but the given version shows the most important features. We use the given smart home adapter instance to retrieve all temperature states (asynchronously). Once the values are known we need to see if any specific rooms have been demanded. If so, we filter the result set.

For production-ready code we may want to increase the robustness by matching the rooms against the list of known locations (and performing similarity checks, if a given room does not match any entry in the list). Here we simply record no match in such cases.

```javascript
// Skill for the "get-temperature" intent. Uses the native Promise returned by
// the smart home adapter instead of the external "promise" package.
module.exports = function (shc, entities) {
  return shc.getAllStates('Temperature').then(function (temperatures) {
    const sentences = [];

    if (entities.length > 0) {
      // Keep only the readings from the requested rooms.
      const original = temperatures;
      temperatures = [];

      entities.forEach(function (entity) {
        if (entity.type === 'location') {
          const location = entity.name.toUpperCase();
          temperatures = temperatures.concat(original.filter(function (temperature) {
            return temperature.location && temperature.location.toUpperCase() === location;
          }));
        }
      });
    } else if (temperatures.length > 0) {
      // No room requested: report the average (rounded to one decimal), avoiding NaN for empty lists.
      const sum = temperatures.reduce((c, nxt) => c + nxt.state.value, 0);
      const average = Math.round(sum / temperatures.length * 10) / 10;
      sentences.push('The average temperature is ' + average + '°C.');
    }

    const values = temperatures.map(function (temperature) {
      return [temperature.state.value, '°C in ', temperature.location, ' (', temperature.device.name, ')'].join('');
    });

    if (values.length > 0) {
      sentences.push('The temperature is ' + values.join(', ') + '.');
    }

    if (sentences.length === 0) {
      sentences.push('No temperature sensor readings found.');
    }

    return sentences.join(' ');
  });
};
```

So we either return the full set of all temperatures, including a small evaluation (here the average of all temperatures), or answer only for the rooms that have been identified as entities.
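The robustness improvement mentioned earlier, matching rooms against the list of known locations with a similarity check, could look like the following. This sketch swaps in plain Levenshtein edit distance as the similarity measure. matchLocation and its maximum distance of 2 are hypothetical choices, and the list of known locations would have to come from the smart home adapter.

```javascript
// Levenshtein edit distance with a single reusable row.
function levenshtein (a, b) {
  const row = Array.from({ length: b.length + 1 }, (_, j) => j);

  for (let i = 1; i <= a.length; i++) {
    let diag = row[0]; // distance(a[0..i-1), b[0..j-1)) for j = 1
    row[0] = i;

    for (let j = 1; j <= b.length; j++) {
      const above = row[j];
      const cost = a[i - 1] === b[j - 1] ? 0 : 1;
      row[j] = Math.min(row[j] + 1, row[j - 1] + 1, diag + cost);
      diag = above;
    }
  }

  return row[b.length];
}

// Hypothetical helper: returns the closest known room, or null if none is close enough.
function matchLocation (spoken, knownLocations, maxDistance = 2) {
  const wanted = spoken.trim().toUpperCase();
  let best = null;
  let bestDistance = Infinity;

  for (const location of knownLocations) {
    const d = levenshtein(wanted, location.toUpperCase());

    if (d < bestDistance) {
      best = location;
      bestDistance = d;
    }
  }

  return bestDistance <= maxDistance ? best : null;
}
```

The skill could call matchLocation(entity.name, rooms) before filtering and answer with the corrected room name.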

The best thing about skills is that they can be extended (or improved) during runtime. There is no fixed binding known when starting the application. The system may evolve as more intents are modeled. This is great for prototyping or for an update process that is to be defined later.
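This runtime extensibility relies on the skill adapter reloading modules whose files have changed. The article does not show helpers.getModule, so the following is only a guess at how it could work. It removes the module from Node's require.cache and then requires it again.

```javascript
const path = require('path');

// Hypothetical version of helpers.getModule (its source is not shown):
// drop any cached copy so that require() reads the file from disk again.
function getModule (fileName) {
  const resolved = require.resolve(path.resolve(fileName));
  delete require.cache[resolved];
  return require(resolved);
}
```

Only the skill file itself is reloaded; modules that it requires stay cached. For small, self-contained skill files this limitation is usually acceptable.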

Configuration Management

In order to make development easy, a configuration management system has been established that is both simple and flexible. The main idea is to aggregate all the important settings in one JSON file and specialize some of these settings in another file. While the first file is always loaded, the latter is only loaded on demand (i.e., if a file with the given name exists). Settings in the special file override (or extend) the settings in the primary file.

The specialization is called environment. The generic settings file is thus the global file, the specialized file is the environment (or local) file. The environment file follows the naming convention that its file name without extension ends with .environmentname, where environmentname represents the chosen environment name. For development two specializations have been used: debug and release (reasonable and familiar choices).

The following image illustrates this way of doing configuration management.

Therefore, we can use the following global file:

```json
{
  "adapter": {
    "host": "smarthomebot.azurewebsites.net"
  },
  "webserver": {
    "port": 3000,
    "assets": "assets",
    "views": "views"
  },
  "smarthome": { }
}
```

in conjunction with, e.g., the specialization for the debug environment:

```json
{
  "adapter": {
    "host": "localhost:3979"
  }
}
```

The idea is clear: instead of connecting to the production proxy (deployed in a Microsoft Azure data center), we connect to the locally running version (used mainly for debugging purposes). Similarly, we could override other settings; however, these seem to be less coupled to the debug / release question.
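Combining the global file with the environment file can be sketched as a recursive object merge. The file names config.json and config.&lt;environment&gt;.json, and the rule that arrays are replaced rather than merged, are assumptions. The article only describes the naming convention in general terms.

```javascript
const fs = require('fs');
const path = require('path');

// Merges `override` into a copy of `base`: nested objects are merged
// recursively, everything else (strings, numbers, arrays) is replaced.
function merge (base, override) {
  const result = Object.assign({}, base);

  for (const [key, value] of Object.entries(override || {})) {
    const current = result[key];
    const bothObjects = [current, value].every((v) => v && typeof v === 'object' && !Array.isArray(v));
    result[key] = bothObjects ? merge(current, value) : value;
  }

  return result;
}

// Hypothetical loader: config.json is required, config.<environment>.json is optional.
function loadConfig (dir, environment) {
  const read = (name) => JSON.parse(fs.readFileSync(path.join(dir, name), 'utf8'));
  const shared = read('config.json');
  const localName = 'config.' + environment + '.json';
  const local = fs.existsSync(path.join(dir, localName)) ? read(localName) : {};
  return merge(shared, local);
}
```

With the two files above, the debug environment ends up with adapter.host set to localhost:3979, while the webserver section stays unchanged.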

Another thing that has been mentioned is the user configuration. A local chat bot client will be mapped to a single user by definition. This user, however, may have different accounts (in different channels, e.g., Skype, Slack, ...) registered. The userData file is bound to an environment, as different environments may use different connection URLs and smart home credentials. Therefore, it makes sense to specialize the user configuration with the given environment.

There is another important difference between the (hierarchical) configuration and the user data. While the configuration is only read and never modified by the application, the user data is usually populated exclusively by the application. Thus, this is the file the application uses to store persistent information about its user. This information is used on a restart of the application to continue with the previous state. This file also stores the refresh token (see the OAuth 2 flow) required to get an access token for our smart home system.

Storing the refresh token (unencrypted) is a security risk. For a client that will be deployed the refresh token should never be stored in an unencrypted fashion.
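Here is a minimal sketch of encrypting the refresh token before it is written to the userData file, using AES-256-GCM from the Node.js crypto module. The encryptToken and decryptToken names and the iv.tag.data storage format are hypothetical. Where the 32-byte key lives (e.g. an environment variable or an OS key store) is left open. Storing it next to the userData file would defeat the purpose.

```javascript
const crypto = require('crypto');

// Encrypts the token with AES-256-GCM; key must be a 32-byte Buffer.
// Output format (hypothetical): base64(iv).base64(tag).base64(ciphertext)
function encryptToken (token, key) {
  const iv = crypto.randomBytes(12); // 96-bit nonce, fresh for every encryption
  const cipher = crypto.createCipheriv('aes-256-gcm', key, iv);
  const data = Buffer.concat([cipher.update(token, 'utf8'), cipher.final()]);
  const tag = cipher.getAuthTag();
  return [iv, tag, data].map((b) => b.toString('base64')).join('.');
}

// Throws if the key is wrong or the stored value was tampered with.
function decryptToken (stored, key) {
  const [iv, tag, data] = stored.split('.').map((s) => Buffer.from(s, 'base64'));
  const decipher = crypto.createDecipheriv('aes-256-gcm', key, iv);
  decipher.setAuthTag(tag);
  return Buffer.concat([decipher.update(data), decipher.final()]).toString('utf8');
}
```

GCM authenticates the ciphertext as well, so a manipulated userData file produces an error instead of a silently corrupted token.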

All in all, most of the configuration is then done via the web browser while the server is running. The following wrapper is essential for this. Generally, we still want to keep the loose coupling between the rest of the application and the web server. Hence we pass the model around via events. The routes, however, are part of this abstraction layer.

The following code shows the WebServer class. For rendering, the Jade view engine is used.

const express = require('express');

const bodyParser = require('body-parser');

const EventEmitter = require('events').EventEmitter;

class WebServer extends EventEmitter {

constructor (config) {

super();

const app = express();

app.use(express.static(__dirname + '/../' + config.assets));

app.use(bodyParser.urlencoded({ extended: true }));

app.set('views', __dirname + '/../' + config.views);

app.set('view engine', 'jade');

app.get('/', (req, res) => {

const model = { };

this.emit('index', model);

res.render('index', model);

});

app.post('/revoke', (req, res) => {

const data = req.body;

const model = { data: data };

this.emit('revoke', model);

res.redirect('/');

});

app.post('/setup', (req, res) => {

const data = req.body;

const model = { data: data };

this.emit('setup', model);

res.render('setup', model);

});

app.listen(config.port, () => {

console.log('Webserver started at port %s!', config.port);

});

}

};

module.exports = WebServer;

This concludes the configuration management in our chat bot.
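The event-based coupling works because Node's EventEmitter calls its listeners synchronously inside emit(), so a listener can fill the model before the route renders it. The following sketch shows this without Express; FakeServer, handleIndex, and attachApplication are hypothetical names standing in for WebServer, its '/' route, and the application code.

```javascript
// Sketch: loose coupling between web server and application via events.
const EventEmitter = require('events').EventEmitter;

class FakeServer extends EventEmitter {
  // Mimics the body of the app.get('/') handler without Express.
  handleIndex() {
    const model = { };
    this.emit('index', model); // all listeners run before emit() returns
    return model;              // WebServer would call res.render('index', model) here
  }
}

// The application side only knows event names, not routes or views.
function attachApplication(server, state) {
  server.on('index', model => {
    model.environment = state.environment;
    model.loggedIn = state.loggedIn;
  });
  server.on('revoke', () => {
    state.loggedIn = false;
  });
}

const server = new FakeServer();
const state = { environment: 'dev', loggedIn: true };
attachApplication(server, state);
console.log(server.handleIndex()); // { environment: 'dev', loggedIn: true }
```

The flip side of this design is that listeners must do their work synchronously: anything filled in after an await or a callback would arrive after res.render has already been called.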

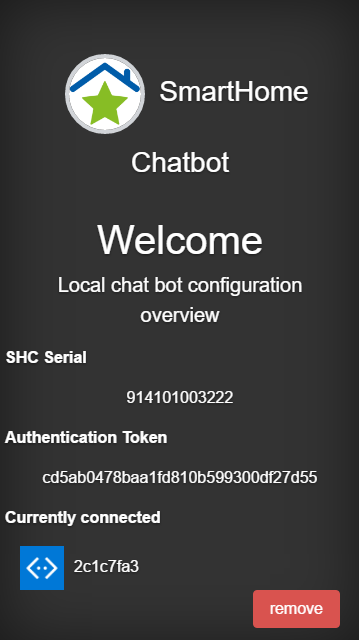

The screenshot above also shows the one-time token displayed in the configuration overview.

Using the code

The code as described so far should be usable from the beginning. However, as mentioned before, we may need to use another smart home provider. The solution therefore does not come with the provider for the RWE SmartHome solution. Instead, the sources provide a generic software provider and two hardware providers that can be used.

The decision which provider to take is up to the reader. We designed the system such that the provider can be selected via the configuration. This also makes it fairly easy to write a custom provider, which may then work with an existing home automation tracker (or any other kind of tool).

We will now go into all the topics to make the provided solution work.

Bot Connector Proxy Setup

The sources for the bot connector proxy are delivered as an ASP.NET Web API project. To deploy or test the given sources we need to enter some required parameters in the web.config. The file originally looks as follows:

<configuration>

<appSettings>

<add key="BotId" value="" />

<add key="MicrosoftAppId" value="" />

<add key="MicrosoftAppPassword" value="" />

<add key="LuisApplicationId" value="" />

<add key="LuisSubscriptionId" value="" />

</appSettings>

<!-- ... -->

</configuration>

The five fields need to be filled out for deployment. Otherwise, if we are only interested in playing around with the solution, we need to provide at least the values for the LuisApplicationId and LuisSubscriptionId keys. If we want to play around with the solution without LUIS integration we can do that by changing the registered services in the container used by the dependency resolver.

Please note that the currently registered LuisEvaluator actually uses these keys. Without them the solution won't work.

The Software Mock

Any smart home adapter needs to fulfill the following basic interface:

interface SmartHomeAdapter {

refresh(code: string): Promise<any>;

login(code: string): Promise<any>;

getAllStates(name: string): CapabilityState[];

getDevice(capabilityId: string): Device;

getLocations(device: Device): string[];

}

We won't go into the details of the custom types mentioned, but their purpose should be clear from their names. Our software mock should be simple enough to be used for, e.g., unit testing (or any kind of integration testing), but also flexible enough to cover scenarios involving other hardware (making their integration simpler). Hence the software mock is a kind of prerequisite for the upcoming gadgets.

Normally, the smarthome section (for the RWE SmartHome system) looks similar to the following snippet:

"smarthome": {

"type": "rwe",

"baseUrl": "/* API URL */",

"clientId": "/* id */",

"clientSecret": "/* secret */",

"redirectUrl": "/* Redirect URL */"

}

The type is important, as it selects the actual adapter to use. For our software mock we can choose "virtual". In the simplest scenario the virtual adapter looks as follows:

const Promise = require('promise');

class SmartHomeClient {

constructor (config) {

this.loggedIn = false;

}

getAllLocations () {

return Promise.resolve({

// ...

});

}

getAllDevices () {

return Promise.resolve({

// ...

});

}

getAllCapabilities () {

return Promise.resolve({

// ...

});

}

getAllCapabilityStates () {

return Promise.resolve({

// ...

});

}

authorizeWithRefresh (refreshToken) {

this.loggedIn = true;

return Promise.resolve({

refresh: refreshToken,

expires: 172800,

shc: { serialnumber: '123456789' }

});

}

authorize (code) {

return this.authorizeWithRefresh(code);

}

}

module.exports = SmartHomeClient;

Now we want to add some meat to make it more pleasing to work with. We can use the configuration to transport predefined sets of devices, locations, and capabilities. Furthermore, we may also transport the name of a module to load for retrieving data. This way, we can use the virtual adapter not only as a mock, but also for real communication, e.g., with files written on the local system. This makes the integration of hardware as described in the following sections possible.
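Since the type field of the smarthome section selects the adapter, the application needs a small lookup from type names to adapter classes. A sketch of such a registry follows; VirtualClient stands in for the SmartHomeClient class above, and registerAdapter / createAdapter are hypothetical names, not part of the project's code.

```javascript
// Hypothetical adapter registry keyed by the "type" configuration value.
class VirtualClient {
  // Stand-in for the virtual SmartHomeClient shown above.
  constructor(config) {
    this.config = config;
    this.loggedIn = false;
  }
}

// A Map avoids accidental hits on Object.prototype keys such as "toString".
const adapters = new Map([['virtual', VirtualClient]]);

function registerAdapter(type, Adapter) {
  adapters.set(type, Adapter);
}

function createAdapter(config) {
  const section = config.smarthome || {};
  const Adapter = adapters.get(section.type);
  if (!Adapter) {
    throw new Error(`Unknown smart home adapter type: ${section.type}`);
  }
  // Each adapter only receives its own configuration section.
  return new Adapter(section);
}

// A custom provider only has to be registered under a new type name.
registerAdapter('tracker', class TrackerClient extends VirtualClient {});
```

Failing loudly on an unknown type is a deliberate choice here: a typo in the configuration should not silently fall back to the mock.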

Most of the flexibility comes from a function that is quite simple in nature. This function builds a resolver: it either takes a resolver function that is passed in, evaluates a function given as a string, or wraps the given entities (falling back to an empty list).

function buildResolver (resolver, entities) {

if (typeof resolver === 'string') {

resolver = eval(resolver);

} else if (resolver && typeof resolver !== 'function') {

entities = resolver;

resolver = undefined;

}

if (!resolver) {

if (entities) {

resolver = () => entities;

} else {

resolver = () => [];

}

}

return resolver;

}

The function above is used in the constructor. For example, for resolving the devices the function could be used as follows.

constructor (config) {

/* ... */

this.deviceResolver = buildResolver(config.deviceResolver, config.devices);

}

By default, nothing is set in the configuration, so every resolver simply returns an empty list. This is not a problem. To allow defining functions on the fly, we also introduce some helper functions that are available in the same context.

const fs = require('fs');

function readLinesFromFile (fn) {

const data = fs.readFileSync(fn, 'utf8');

return data.trim().split('\n');

}

function readLastLineFromFile (fn) {

const lines = readLinesFromFile(fn);

return lines.slice(-1)[0];

}

function readFileAsJson (fn) {

const data = fs.readFileSync(fn, 'utf8');

return JSON.parse(data);

}

With these helpers in place we can reach basically any local data source in no time through the virtual adapter.
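To illustrate how the pieces combine, here is a sketch of the three ways a resolver can be configured. buildResolver and readFileAsJson are copied from the code above; the configuration values and the temporary devices file are made up for this demo.

```javascript
// Sketch: buildResolver with nothing configured, with static entities, and
// with a function passed as a string that uses the readFileAsJson helper.
const fs = require('fs');
const os = require('os');
const path = require('path');

function readFileAsJson (fn) {
  return JSON.parse(fs.readFileSync(fn, 'utf8'));
}

function buildResolver (resolver, entities) {
  if (typeof resolver === 'string') {
    // Direct eval: the string sees this module's scope, including the helpers.
    resolver = eval(resolver);
  } else if (resolver && typeof resolver !== 'function') {
    entities = resolver;
    resolver = undefined;
  }
  if (!resolver) {
    resolver = entities ? () => entities : () => [];
  }
  return resolver;
}

// 1. Nothing configured: an empty list.
const empty = buildResolver(undefined, undefined);

// 2. Static entities taken from the configuration.
const fixed = buildResolver(undefined, [{ id: 'd1', name: 'Kitchen thermostat' }]);

// 3. A function given as a string; the file is read again on every call.
const file = path.join(os.tmpdir(), `devices-${process.pid}.json`);
fs.writeFileSync(file, JSON.stringify([{ id: 'd2', name: 'Front door' }]));
const dynamic = buildResolver(`() => readFileAsJson(${JSON.stringify(file)})`);

console.log(empty(), fixed().length, dynamic()[0].id); // [] 1 d2
```

Because the string is passed to eval, the configuration file effectively contains code and should only come from a trusted source.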

Single CC3200 Instance

The software mock is a nice (and easily controllable) possibility to play around with the smart home chat bot solution. However, if we want to make real use of the smart home chat bot, we need to connect it to real sensors. Even though the best solution would be to use a system such as the one described previously (RWE SmartHome), we may not want to spend a lot of money on it. Maybe there is no good system on the local market (RWE SmartHome is currently only offered in Central Europe), or the available solutions do not provide any kind of API. In such scenarios we may want to use a lightweight solution as a substitute.

The CC3200 is a powerful microcontroller unit (MCU) that is also available on an SDK board, the CC3200-LaunchXL. To simplify development, the board already comes with some extension possibilities and a few integrated sensors. One of these sensors is a temperature sensor. Ideal for our case!

In the following we go through the necessary steps to set up a CC3200 development board and program it with sensor readings and periodic deliveries to a local server (where the local chat bot is running).

Requirements

To use a CC3200 for obtaining sensor data (e.g., with its built-in temperature sensor, or any other externally connected sensor) as described in this tutorial we need the following setup:

- The TI CC3200-LaunchXL board

- One USB micro cable

- Installed Energia IDE

- Internet access via WiFi for the CC3200

We can use the editor provided by Energia or any other editor, e.g., Sublime Text, Emacs, or vim.

Connecting the LaunchPad

The easiest way to get the code onto the LaunchPad is to press CTRL + M in Energia. This compiles the binaries, uploads the code, opens the serial monitor, and runs the application. However, by design the LaunchPad does not offer a very programmer-friendly method for a quick build-test-debug loop: different jumpers have to be set to go from run mode to program mode and back again.

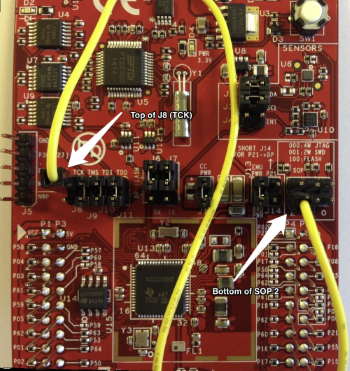

The following image shows a neat workaround using the J8 (TOP) connector together with the SOP2 (BOTTOM).

Here are the detailed step-by-step instructions:

- We start by removing both jumpers J8 and SOP2 (if installed).

- With the USB connector facing up, we connect one side of the jumper wire to the top of J8 and the other side to the bottom of SOP2.

- Now when we run the code from Energia via CTRL + M we will see the serial monitor popping up (be sure to have the correct baud rate configured!) and the LEDs start blinking.

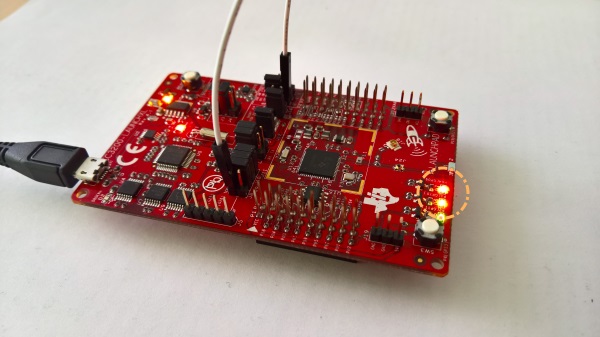

At this point in time our setup looks similar to the next image.

So far so good. The next step is to get a little bit more interactive. We could either use one of the buttons, PUSH1 (pin 3) or PUSH2 (pin 11), or some information from an integrated sensor. For the buttons we would use digitalRead (see reference) to get the state (HIGH or LOW) and constantly monitor the current state via polling. Buttons are boring, so let's go for the sensor input right away.

Sending HTTP Requests

Until this point we have already achieved quite a lot. We started with a simple kind of "Hello World" and reached a stage where we are able to gather sensor information and trigger outputs based on the collected information. This is the original purpose of microcontrollers: read hardwired sensors, process the input, and control hardwired actuators accordingly. Adding wireless connectivity, especially to the world wide web, opens a whole new universe of possibilities and enables IoT applications. Now we want to take this critical step.

We can use httpbin.org to make a request to its API, e.g., to get a single integer. The resulting number will then be displayed using a blinking LED. A five-second pause (LED off) is inserted after each HTTP call.

Before we can start with an HTTP call we need to establish a network connection. Luckily, the CC3200 LaunchPad comes with a WiFi chip on-board.

Similar to the integration of the accelerometer we put the code in a new file wifi.cpp. The header is quite simple. Here we only declare a single function.

#pragma once

void connectWifi(char* ssid, char* password);

The source file contains quite a number of print statements for debugging, which is useful to identify what's going on in the setup process. To make it fully work we use the WiFi library that comes with Energia.

#include "wifi.h"

#include <Energia.h>

#include <WiFi.h>

void connectWifi(char* ssid, char* password) {

Serial.print("Connecting to WIFI network ");

Serial.print(ssid);

WiFi.begin(ssid, password);

while (WiFi.status() != WL_CONNECTED) {

Serial.print(".");

delay(300);

}

Serial.println(" connected!");

Serial.print("Waiting for an IP address ");

while (WiFi.localIP() == INADDR_NONE) {

Serial.print(".");

delay(300);

}

Serial.println(" received!");

}

The steps included here are simple. We start by connecting to the WiFi network and wait until the connection is established. Finally, we also wait until the router has assigned us an IP address. At this point the WiFi connection is established and ready to be used.

How can we now make an HTTP request? Well, it turns out that this is not such an easy task! In the beginning our API is as simple as the following declaration:

bool httpGetRequest(char* hostname, char* path);

We just pass in a hostname, e.g., httpbin.org, and a path. In our case we choose /bytes/4 to obtain 4 random bytes. The source code for the handling function looks close to the code shown below. Many of the functions used come from the SimpleLink library and can be looked up in its excellent documentation.

#include <Energia.h>
#include <WiFi.h>

bool httpGetRequest(char* host, char* path) {
    String hostname = String(host);
    String head_get = "GET " + String(path) + " HTTP/1.1";
    String head_host = "Host: " + hostname;
    // HTTP expects CRLF line endings; the empty line terminates the header.
    String request = head_get + "\r\n" +
                     head_host + "\r\n\r\n";
    char receive_msg_buffer[1024];
    uint32_t host_ip;
    bool success = false;
    SlTimeval_t timeout { .tv_sec = 45, .tv_usec = 0 };

    // Resolve the host name via DNS; a non-zero return value signals an error.
    if (sl_NetAppDnsGetHostByName((signed char*)hostname.c_str(), hostname.length(), &host_ip, SL_AF_INET)) {
        return false;
    }

    SlSockAddrIn_t socket_address {
        .sin_family = SL_AF_INET, .sin_port = sl_Htons(80), .sin_addr = { .s_addr = sl_Htonl(host_ip) }
    };

    // sl_Socket returns a negative value on failure, hence the signed type.
    int16_t socket_handle = sl_Socket(SL_AF_INET, SL_SOCK_STREAM, IPPROTO_TCP);
    if (socket_handle < 0) {
        return false;
    }

    int16_t received = -1;
    if (sl_SetSockOpt(socket_handle, SL_SOL_SOCKET, SL_SO_RCVTIMEO, (const void*)&timeout, sizeof(timeout)) >= 0 &&
        sl_Connect(socket_handle, (SlSockAddr_t*)&socket_address, sizeof(SlSockAddrIn_t)) >= 0 &&
        sl_Send(socket_handle, request.c_str(), request.length(), 0) >= 0 &&
        (received = sl_Recv(socket_handle, receive_msg_buffer, sizeof(receive_msg_buffer) - 1, 0)) >= 0) {
        // sl_Recv does not terminate the data; do it before printing.
        receive_msg_buffer[received] = '\0';
        Serial.println(receive_msg_buffer);
        success = true;
    }

    sl_Close(socket_handle);
    return success;
}

First we define the request message to send. Note that the empty line at the end (two consecutive line breaks) is crucial; it indicates that the header ends here. Otherwise we'll receive a timeout, as the server waits for more. Then we retrieve the IP address of the host to connect to. Then we set up the connection socket as TCP/IP on port 80 (standard HTTP connection). The steps to follow are then described as

- setting socket options; in this case we only set the timeout to 45s,

- connecting the socket to the previously defined address,

- sending the content: we transmit the request, which is given by the headers only, and finally

- receiving the answer.

The response will be available as a single string; there is no distinction between headers and content body by default. We need to do the parsing ourselves.

Once we compile and run this code we should see something similar to the following:

HTTP/1.1 200 OK

Server: nginx

Date: Sat, 18 Jun 2016 07:24:31 GMT

Content-Type: application/octet-stream

Content-Length: 4

Connection: keep-alive

Access-Control-Allow-Origin: *

Access-Control-Allow-Credentials: true

L8=r

If we did not receive anything, we should do some debugging to find the origin of the problem. The last characters are the string representation of the 4 random bytes we are interested in. Note that a random binary byte does not necessarily correspond to a printable ASCII symbol.
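Since the board hands us the whole response as one string, someone has to split it into status line, headers, and body. The following JavaScript sketch (usable, e.g., on the receiving side or as a reference for porting) shows one way to do that and to turn the 4 bytes from /bytes/4 into a single integer. parseHttpResponse and bytesToUint32 are hypothetical helpers, and reading the bytes as big-endian is an arbitrary choice.

```javascript
// Sketch: split a raw HTTP/1.1 response into status, headers and body.
function parseHttpResponse(raw) {
  // The header ends at the first empty line (CRLF CRLF, LF LF as a fallback).
  let sep = raw.indexOf('\r\n\r\n');
  let sepLength = 4;
  if (sep < 0) {
    sep = raw.indexOf('\n\n');
    sepLength = 2;
  }
  if (sep < 0) {
    throw new Error('Incomplete response: no end of header found');
  }
  const lines = raw.slice(0, sep).split(/\r?\n/);
  const [version, code, ...reason] = lines[0].split(' ');
  const headers = {};
  for (const line of lines.slice(1)) {
    const colon = line.indexOf(':');
    if (colon < 0) continue; // skip malformed header lines
    headers[line.slice(0, colon).trim().toLowerCase()] = line.slice(colon + 1).trim();
  }
  return {
    version,
    status: Number(code),
    reason: reason.join(' '),
    headers,
    body: raw.slice(sep + sepLength),
  };
}

// Interpret the first 4 body bytes as one unsigned 32-bit number.
function bytesToUint32(body) {
  const bytes = Buffer.from(body, 'latin1'); // one character per byte
  return bytes.readUInt32BE(0);
}
```

One caveat of printing the buffer as a C string on the board: a random byte with the value 0 terminates the string early, so the body can appear shorter than the Content-Length header says.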

Debugging the LaunchPad

Debugging software on embedded systems is difficult. Most of the tooling and techniques we've learned to love are not available. Essentially, we are back to the stone age of programming. We've already seen that the Serial class represents a useful utility to gain some knowledge about what's actually going on.

The CC3200 LaunchPad has a JTAG (4 wire) and SWD (2 wire) interface for development and debugging. In this tutorial we will not go to the hardware level. Instead, we'll use the serial interface provided by the FTDI connector via USB. We've already seen that writing messages to the serial interface is an option, however, an even better option is to install the Code Composer Studio from Texas Instruments and use breakpoints. This brings back one of the most useful and efficient debugging methods available in everyday programming.

Once we've downloaded and installed the Code Composer Studio we can set it up to connect to the right COM port with the configured baud rate. It is important to configure CCS to point to the Energia installation path. Otherwise, required libraries and files may not be found. Finally, we may open Energia projects directly from CCS.

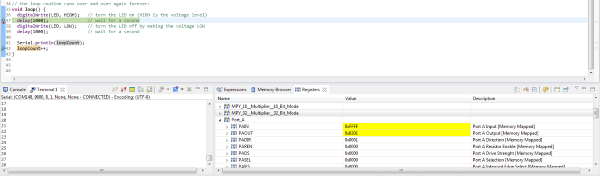

Another cool option of CCS is the ability to view the currently used registers and memory directly. This is shown below.

This way we can accelerate the search for possible errors in our code. Assuming that the HTTP request now runs smoothly we can come back to solve our original problem: Making a secure HTTP request from the CC3200.

Reading the Accelerometer

The board comes with some sensors. There is the temperature sensor (called tmp006), which is available at the address 0x41, and an accelerometer (bma222) at 0x18. In this sample we will use the accelerometer as it is far easier to use for demo purposes (the latency and accuracy for manipulating temperatures are quite difficult to manage).

Warning: As there is an overlap in the address register we can't use the yellow and green LED together with the accelerometer (remember that almost all pins are multiplexed). Consequently, we will only use the red LED from now on.

To read out the accelerometer sensor we include the header Wire.h. Also we should put the code we write to read from the accelerometer in a new file. We will call it accelerometer.cpp with the header accelerometer.h. Initially, our code looks as follows:

#include <Wire.h>

#include "accelerometer.h"

void setup() {

Serial.begin(9600);

Serial.println("Entering setup!");

Wire.begin();

pinMode(RED_LED, OUTPUT);

}

void loop() {

Serial.println("Next loop iteration!");

AccData acc = readAccelerometer();

Serial.print(acc.x);

Serial.print(", ");

Serial.print(acc.y);

Serial.print(", ");

Serial.println(acc.z);

digitalWrite(RED_LED, HIGH);

delay(1000);

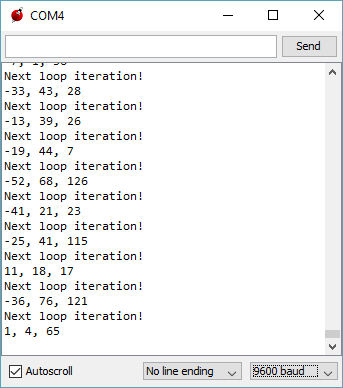

}The readAccelerometer function is declared in our new header file. In the setup function we need to initialize the Wire library. In each iteration we read the accelerometer and print the values to the screen. Running this in Energia results in the following output.

The AccData structure is defined in our header. The code here looks as follows.

#pragma once

#include <stdint.h>

struct AccData {

int8_t x;

int8_t y;

int8_t z;

};

AccData readAccelerometer();

Quite straightforward. The source file is much more interesting.

#include "accelerometer.h"

// ...

int8_t readSingleAxis(uint8_t axis);

AccData readAccelerometer() {

AccData data;

data.x = readSingleAxis(0x03);

data.y = readSingleAxis(0x05);

data.z = readSingleAxis(0x07);

return data;

}

We read the registers for each of the components of the three-dimensional acceleration vector and return the full result. The question is now how readSingleAxis, which reads a single component, is defined. The rest is displayed below.

#include <Energia.h>

#include <Wire.h>

void initializeI2C(uint8_t base_address, uint8_t register_address) {

Wire.beginTransmission(base_address);

Wire.write(register_address);

Wire.endTransmission();

}

uint8_t readI2C(uint8_t base_address, uint8_t register_address) {

initializeI2C(base_address, register_address);

Wire.requestFrom(base_address, 1);

while (Wire.available() < 1);

return Wire.read();

}

int8_t readSingleAxis(uint8_t axis) {

return readI2C(0x18, axis);

}

Each read operation starts an I2C connection to the device at the given address. We remember that the address for the accelerometer was 0x18. We then simply follow the standard I2C communication protocol implemented with help of the Wire library that abstracts away the low level stuff for us.

Having written this part, we can adjust the code in the loop function to show the red light in case of a "falling" board. From our elementary physics course in school we remember that a freely falling body is weightless, i.e., the accelerometer measures zero acceleration in z direction (as compared to 1 g for a body resting on the earth's surface). We assume that the z axis of the accelerometer is actually pointing "upwards".

As we measure a value of approximately 65 in z direction when the board is at rest, we may normalize to this value. We should show a red light for values up to 0.4 g, i.e., when the raw value is 26 (0.4 × 65) or less. Our modified code looks as given below.

#include <Wire.h>

#include "accelerometer.h"

void setup() {

Serial.begin(9600);

Wire.begin();

pinMode(RED_LED, OUTPUT);

}

void loop() {

AccData acc = readAccelerometer();

Serial.println(acc.z);

if (acc.z > 26) {

digitalWrite(RED_LED, LOW);

} else {

digitalWrite(RED_LED, HIGH);

}

}

We may want the warning to stay active for some minimum duration, say one second. In this case we can modify the code to look as follows.

if (acc.z > 26) {

digitalWrite(RED_LED, LOW);

} else {

digitalWrite(RED_LED, HIGH);

delay(1000);

}

Now, once triggered, the red LED will light up for at least 1 second.
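The delay(1000) call has a side effect: the board stops sampling while the warning is held. The following sketch expresses the same decision logic in JavaScript so that it can be tested off the board: it converts the raw register byte (two's complement), normalizes it with the measured 65 counts per g, and holds the warning without blocking. All names are hypothetical; porting it to Energia with millis() is left as an assumption, not something the article shows.

```javascript
// Sketch only: JavaScript stand-in for the Energia loop logic.
const ONE_G_COUNTS = 65;  // raw z value of the board at rest (about 1 g)
const THRESHOLD_G = 0.4;  // at or below this, the board counts as falling
const HOLD_MS = 1000;     // keep the warning on for at least one second

// The register delivers a two's complement byte (int8_t on the board).
function toInt8(byte) {
  return byte > 127 ? byte - 256 : byte;
}

function toG(rawByte) {
  return toInt8(rawByte) / ONE_G_COUNTS;
}

// Returns a step function mapping (raw z byte, current time in ms) to the
// LED state. Unlike delay(1000) it never blocks, so sampling continues
// while the warning is held.
function createFallDetector() {
  let holdUntil = -Infinity;
  return function step(rawZ, nowMs) {
    if (toG(rawZ) <= THRESHOLD_G) {
      holdUntil = nowMs + HOLD_MS; // (re)start the hold period
    }
    return nowMs < holdUntil; // true means: red LED on
  };
}
```

On the board, the time would come from millis(); storing the trigger time and comparing millis() - triggeredAt < 1000 with unsigned arithmetic also survives the counter wrap-around.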

Reading and Sending the Temperature

We can use a simple Node.js express server (e.g., listening on port 3000) to receive and store the data. The code can be as simple as follows.

const express = require('express');

const app = express();

app.use(function (req, res, next) {

req.rawBody = '';

req.setEncoding('utf8');

req.on('data', function (chunk) {

req.rawBody += chunk;

});

req.on('end', function () {

next();

});

});

app.post('/temperature', function (req, res) {

console.log(req.rawBody);

// Do something with the raw value!

res.send('');

});

app.listen(3000);

With our previously described helpers in place we can write code for the CC3200 that is minimal yet functional.

The following code connects to the local WiFi, wakes up the temperature sensor, and performs a continuous read from the sensor with a gap of 2 seconds (for illustration purposes).

Adafruit_TMP006 tmp006(0x41);

void setup() {

Serial.begin(9600);

connectWifi(WLAN_PUB_SSID, WLAN_KEY_CODE);

tmp006.begin();

tmp006.wake();

setCurrentTime();

}

void loop() {

char receive_msg_buffer[1024];

char send_msg_buffer[128];

while (true) {

delay(2000);

float value = tmp006.readObjTempC();

sprintf(send_msg_buffer, "%lf", value);

Serial.println(send_msg_buffer);

httpPostRequest(TMP_SNSR_HOST, TMP_SNSR_PORT, TMP_SNSR_PATH, send_msg_buffer, receive_msg_buffer);

}

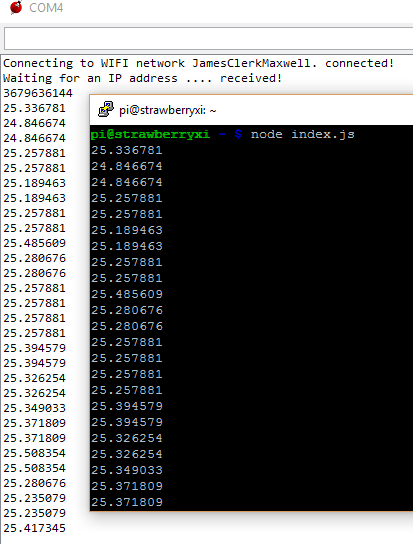

}In the end if we follow all these steps the temperature is successfully read and transported. Let's check this using the debugger.

Multiple Sensor Tags

If a single CC3200 is not sufficient, we can also include multiple temperature sensors that are connected via Bluetooth Low Energy. This may even be simpler than using the CC3200, which involves low-level hardware details to read out the sensor data and to perform an HTTP request on a minimal kind-of operating system.

In the following we will explain how the sensor tag(s) may be used to retrieve temperature readings via BTLE.

Requirements

To include this approach in our setup we need the following additional things:

- Active Bluetooth on the Raspberry Pi

- At least one TI SensorTag

We can use an editor installed on the Raspberry Pi 3, e.g., nano, ed, or vim. The tutorial uses the *Raspbian Jessie* image on the Raspberry Pi 3. This one should come with the Bluetooth drivers, otherwise try executing the following command:

sudo apt-get install pi-bluetooth

We start by setting up the hardware environment. The software should be all pre-configured, especially if we choose one of the available images.

Playing with Bluetooth from the Command Line

We connect to the Raspberry Pi with our favorite SSH client (e.g., PuTTY in case of Windows). In the shell we can now play around with the TI SensorTag using the following instructions:

- Run the standard Bluetooth program (it should come with the OS) by typing `bluetoothctl`.

- Turn on Bluetooth, if not already on, by typing `power on`. Similarly, the power can be turned off with `power off`.

- Use the `devices` command to list the known devices.

- Enter the device discovery mode with the `scan on` command. After some time the sensor tag should appear (let's say with the MAC address 34:B1:F7:D4:F2:CF).

- Enter `pair 34:B1:F7:D4:F2:CF` to pair the Pi with the sensor tag.

- Now we can stop discovering devices with `scan off`.

- Exit the program by typing `quit`.

This way we discovered and paired our device. Now we can use the gatttool to play around with values.

- Run the program by typing `gatttool -b 34:B1:F7:D4:F2:CF --interactive`. We enter an interactive session.

- The first command we issue is `connect`. We should see a "Connection successful" message.

- Now we can try to read from the sensor tag: `char-read-hnd 0x25` uses the handle 0x25 to read data from the thermometer. We should see some zeros.

- To read some values we need to turn on the thermometer. We issue `char-write-cmd 0x29 01` to turn on the thermometer sensor via its configuration handle 0x29.

- Issuing the command `char-read-hnd 0x25` again should now yield a non-zero value.

- Exit the program by typing `quit`.

Handles

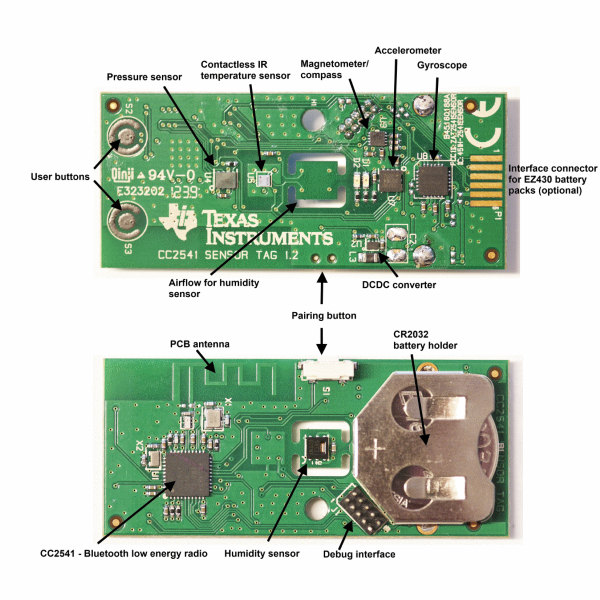

The sensor tag comes with a lot of different sensors. This includes:

- Contactless IR temperature sensor (Texas Instruments TMP006)

- Humidity Sensor (Sensirion SHT21)

- Gyroscope (Invensense IMU-3000)

- Accelerometer (Kionix KXTJ9)

- Magnetometer (Freescale MAG3110)

- Barometric pressure sensor (Epcos T5400)

- On-chip temperature sensor (Built into the CC2541)

- Battery/voltage sensor (Built into the CC2541)

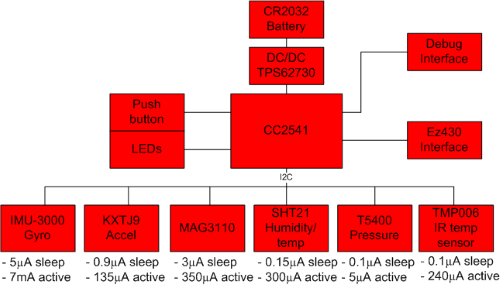

Turning the different sensors on or off has an impact on the power consumption. The following image illustrates the different power requirements.

The following table gives a short overview on some of the previously mentioned sensors.

| Sensor | Read handle | Data length | Config handle | Notification handle |
|---|---|---|---|---|
| IR Temperature | 0x25 | 4 bytes | 0x29 | 0x26 |
| Accelerometer | 0x2d | 3 bytes | 0x31 | 0x2e |
| Humidity | 0x38 | 4 bytes | 0x3c | 0x39 |
| Magnetometer | 0x40 | 6 bytes | 0x44 | 0x41 |
| Barometric Pressure | 0x4b | 4 bytes | 0x4f | 0x4c |
| Gyroscope | 0x57 | 6 bytes | 0x5b | 0x58 |
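The table translates directly into data. The sketch below builds the non-interactive gatttool calls (the same --char-write / --char-read form used in the scripts of this section) for a given sensor. gatttoolCommands and SENSOR_HANDLES are hypothetical names; writing 01 to the configuration handle is what the text does for the thermometer, and other sensors may need different values, so the enable value is a parameter.

```javascript
// Sketch: handle table as data plus a gatttool command builder.
const SENSOR_HANDLES = {
  'IR Temperature':      { read: 0x25, length: 4, config: 0x29 },
  'Accelerometer':       { read: 0x2d, length: 3, config: 0x31 },
  'Humidity':            { read: 0x38, length: 4, config: 0x3c },
  'Magnetometer':        { read: 0x40, length: 6, config: 0x44 },
  'Barometric Pressure': { read: 0x4b, length: 4, config: 0x4f },
  'Gyroscope':           { read: 0x57, length: 6, config: 0x5b },
};

// Format a handle like the table does, e.g. 0x2d -> "0x2d".
const hex = n => '0x' + n.toString(16).padStart(2, '0');

// Returns [enable command, read command] for one sensor on one device.
// enableValue defaults to "01" (assumption: correct for the thermometer).
function gatttoolCommands(mac, sensor, enableValue = '01') {
  const h = SENSOR_HANDLES[sensor];
  if (!h) {
    throw new Error(`Unknown sensor: ${sensor}`);
  }
  return [
    `gatttool -b ${mac} --char-write -a ${hex(h.config)} -n ${enableValue}`,
    `gatttool -b ${mac} --char-read -a ${hex(h.read)}`,
  ];
}
```

The length field is kept so that a caller can check that the read returned the expected number of bytes.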

The barometric pressure sensor also requires an extra calibration, which has to be done before the first measurement. The following steps are required:

- We issue the command `char-write-cmd 0x4f 02`. This performs the calibration.

- The device is now read via `char-read-hnd 0x52`, yielding the raw value.

Overall, the following image shows a nice map of the sensor components placed over the sensor tag board.

That is essentially it. We can now write a simple bash script that queries the sensor every once in a while and writes the result to a text file, using the same text file structure as introduced in the software mock.

For instance, the following script pairs all devices listed in the file devices (one MAC address per line).

#!/bin/bash

bluetoothctl <<< "power on"

sleep 1s

bluetoothctl <<< "scan on"

sleep 5s

while IFS='' read -r line || [[ -n "$line" ]]; do

bluetoothctl <<< "pair ${line}"

done < devices

sleep 4s

while IFS='' read -r line || [[ -n "$line" ]]; do

bluetoothctl <<< "disconnect ${line}"

done < devices

We can use the same file to read temperature sensor values from these devices. The following bash script does this.

#!/bin/bash

while IFS='' read -r line || [[ -n "$line" ]]; do

gatttool -b ${line} --char-write -a 0x29 -n 01

gatttool -b ${line} --char-read -a 0x25

done < devices

From this point on it's easy to just keep polling the devices (e.g., every five minutes) to gain some information about the temperature.
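The read command only prints raw bytes, so some conversion is needed before the value can go into the text file. The sketch below assumes that gatttool prints a line like "Characteristic value/descriptor: 0a fd 48 0c" and, following TI's documentation for the CC2541 SensorTag as we understand it, that bytes 2 and 3 hold the die (ambient) temperature as a signed little-endian 16-bit value in units of 1/128 °C. Both are assumptions to verify against the actual device; the object (IR) temperature needs TI's more involved TMP006 formula and is not computed here.

```javascript
// Sketch: turn `gatttool --char-read -a 0x25` output into a temperature.
function parseGatttoolBytes(output) {
  // Expect hex byte pairs after the last colon, up to the end of the line.
  const match = /:\s*((?:[0-9a-f]{2}\s*)+)$/i.exec(output.trim());
  if (!match) {
    throw new Error(`Unexpected gatttool output: ${output}`);
  }
  return Buffer.from(match[1].trim().split(/\s+/).map(b => parseInt(b, 16)));
}

// Ambient (die) temperature in °C from the 4-byte IR temperature reading.
function ambientTemperature(output) {
  const bytes = parseGatttoolBytes(output);
  return bytes.readInt16LE(2) / 128;
}
```

A polling script could pipe each read through such a function and append the result to the text file that the virtual adapter reads.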

Bonus: Speech To Bot

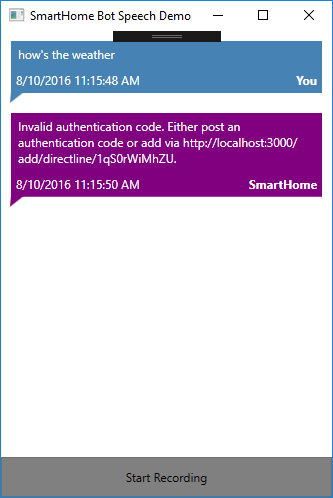

Once we have the generic text layer established we are free to do basically anything. One of the most interesting possibilities is to place a speech-to-text layer on top of our design. Some clients (e.g., Skype on most mobile platforms) integrate such a layer for free (usually, this is part of the input text box / soft keyboard from the underlying operating system). However, for some reason we may want to supply a special kind of application that provides voice recognition as input.

Using Direct Line

The first question we need to answer is how to integrate such a client with our chat bot system. The answer is easy: via Direct Line. Besides integrating channels such as Skype, Telegram, or Facebook, we can also integrate a completely unknown channel. This channel can work against a known (and documented) API; in the simplest case, however, we just use the Microsoft.Bot.Connector.DirectLine package from NuGet.

The library allows us to write the following code. This is everything to get our own chat client up to speed!

sealed class MessageChannel : IDisposable

{

private static readonly String BingSecret = ConfigurationManager.AppSettings["BingSecret"];

private static readonly String EndPoint = ConfigurationManager.AppSettings["SmartBotEndpoint"];

private static readonly Uri DirectLine = new Uri("https://directline.botframework.com");

private readonly Conversation _conversation;

private readonly DirectLineClient _client;

public event EventHandler<MessageEvent> Received;

public MessageChannel()

{

var credentials = new DirectLineClientCredentials(BingSecret, EndPoint);

_client = new DirectLineClient(DirectLine, credentials);

_conversation = _client.Conversations.NewConversation();

}

public Task SendAsync(String content)

{

var message = new Message(text: content);

return _client.Conversations.PostMessageAsync(_conversation.ConversationId, message);

}

public async Task ReceiveAsync(CancellationToken cancellationToken)

{

var watermark = default(String);

while (!cancellationToken.IsCancellationRequested)

{

var messages = await _client.Conversations.GetMessagesAsync(_conversation.ConversationId, watermark, cancellationToken);

foreach (var message in messages.Messages)

{

Received?.Invoke(this, new MessageEvent(message.Text, message.Created, message.FromProperty));

}

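watermark = messages.Watermark;

// Wait briefly before the next request instead of polling in a tight loop.
await Task.Delay(TimeSpan.FromSeconds(1), cancellationToken);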

}

}

public void Dispose()

{

_client.Dispose();

}

}

The method for sending posts a single message to the conversation. The messages within the conversation (including the ones we've sent) can be obtained via the GetMessagesAsync method. Here, we should supply a watermark to only get the messages since the last retrieval. The method we've written receives messages continuously, which amounts to a simple polling loop. The batches of messages we've got are then delivered in the form of events.
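The watermark mechanism is easy to get wrong, so here it is in isolation. This sketch uses a fake in-memory conversation; fetchMessages is a hypothetical, synchronous stand-in for GetMessagesAsync, and the watermark is simply a message count here, whereas the real service treats it as an opaque string.

```javascript
// Sketch: watermark-based retrieval against a fake conversation.
function createFakeConversation() {
  const messages = [];
  return {
    post(text, from) { messages.push({ text, from }); },
    // Returns all messages after the watermark plus a new watermark.
    fetchMessages(watermark) {
      const start = watermark === undefined ? 0 : Number(watermark);
      return { messages: messages.slice(start), watermark: String(messages.length) };
    },
  };
}

function createReceiver(conversation, onMessage) {
  let watermark; // undefined: fetch everything on the first call
  return function poll() {
    const batch = conversation.fetchMessages(watermark);
    batch.messages.forEach(onMessage);
    watermark = batch.watermark; // only newer messages next time
    return batch.messages.length;
  };
}
```

Since our own messages come back as well, a client that only wants the bot's answers would additionally filter on the sender (the FromProperty in the C# code).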

Bing Speech-To-Text

A really simple way to include speech recognition in a C# application is the Bing Speech-To-Text API. Again, we can use a library that is freely available on NuGet. We use the Microsoft.ProjectOxford.SpeechRecognition-x64 package to create a VoiceChannel class for allowing microphone input.

The beauty of this library lies in its simplicity. All we need to do is create a new client using the SpeechRecognitionServiceFactory factory. In our case we want the Bing Speech-To-Text service also to make use of our defined model (currently residing in LUIS). We therefore go with the CreateMicrophoneClientWithIntent method. Besides the obvious Bing subscription we also need to supply our LUIS application and subscription ID. The rest is done internally.

The library even takes away the task of finding and controlling the microphone. The recording and everything is directly integrated. A couple of events make the library smooth and easy to work with.

The following code illustrates the basic usage.

sealed class VoiceChannel : IDisposable

{

private static String SpeechLocale = ConfigurationManager.AppSettings["SpeechLocale"];

private static String LuisApplicationId = ConfigurationManager.AppSettings["LuisApplicationId"];

private static String LuisSubscriptionId = ConfigurationManager.AppSettings["LuisSubscriptionId"];

private static String BingPrimaryKey = ConfigurationManager.AppSettings["BingPrimaryKey"];

private static String BingSecondaryKey = ConfigurationManager.AppSettings["BingSecondaryKey"];

private readonly MicrophoneRecognitionClientWithIntent _mic;

private Boolean _recording;

public event EventHandler<IntentEvent> ReceivedIntent;

public VoiceChannel()

{

_recording = false;

_mic = SpeechRecognitionServiceFactory.CreateMicrophoneClientWithIntent(

SpeechLocale,

BingPrimaryKey,

BingSecondaryKey,

LuisApplicationId,

LuisSubscriptionId);

// Forward intents through a single permanent subscription; consumers subscribe
// to ReceivedIntent itself, so removing a handler works as expected.
_mic.OnIntent += (sender, ev) => ReceivedIntent?.Invoke(this, new IntentEvent(ev.Payload));

_mic.OnResponseReceived += OnResponseReceived;

_mic.OnMicrophoneStatus += OnMicrophoneStatus;

}

public Boolean IsRecording

{

get { return _recording; }

}

public void ToggleRecording()

{

if (_recording)

{

_mic.EndMicAndRecognition();

}

else

{

_mic.StartMicAndRecognition();

}

}

private void OnMicrophoneStatus(Object sender, MicrophoneEventArgs e)

{

_recording = e.Recording;

}

private void OnResponseReceived(Object sender, SpeechResponseEventArgs e)

{

foreach (var phrase in e.PhraseResponse?.Results ?? Enumerable.Empty<RecognizedPhrase>())

{

Debug.WriteLine(phrase.DisplayText);

}

}

public void Dispose()

{

_mic.Dispose();

}

}With the speech recognition available we can wire everything together easily. In the end the UI can be as simple as having a button to start and end the speech recognition. A message dialog shows all the performed questions and answers.

We may also want some speech output. This can be integrated quite easily using the System.Speech library (which should be available on Windows 8+ with .NET 4.5).

Synthesize Text-To-Speech

Using the available speech synthesizer is a cheap and reliable way to provide some basic audio output. This can be used in many scenarios, mostly for improving accessibility. The topic of accessibility is, of course, not the primary use case for our scenario. We just want to show something cool here and illustrate the possibilities.

The following code implements synthesizing a given message. Admittedly, the class is little more than a thin wrapper. Note, however, that SpeakAsync does not return a Task; it returns a Prompt. To know when a message has finished, we need to check the IsCompleted property of the returned prompt. This logic (checking messages, queueing them, etc.) could have been implemented here, but for brevity the implementation was kept simple and minimal.

using System;
using System.Speech.Synthesis;

sealed class SpeechChannel : IDisposable
{
    private readonly SpeechSynthesizer _synthesizer;

    public SpeechChannel()
    {
        _synthesizer = new SpeechSynthesizer();
    }

    public void Dispose()
    {
        _synthesizer.Dispose();
    }

    // Fire-and-forget: SpeakAsync returns a Prompt immediately, not a Task
    public void Say(String message)
    {
        _synthesizer.SpeakAsync(message);
    }
}

The screenshot of the final result is shown below. It is a lightweight WPF application that essentially contains only an ItemsControl and a button. That's it. The messages are received continuously.
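Because SpeakAsync hands back a Prompt rather than a Task, awaiting a spoken message takes a little glue code. The following is a minimal sketch, assuming .NET 4.5 and a reference to the System.Speech assembly; the SpeakTaskAsync extension method is a hypothetical helper, not part of System.Speech or the original source code. It bridges the synthesizer's SpeakCompleted event to a Task via TaskCompletionSource.

```csharp
using System;
using System.Speech.Synthesis;
using System.Threading.Tasks;

static class SpeechSynthesizerExtensions
{
    // Hypothetical helper (not part of System.Speech): speaks a message and
    // returns a Task that completes once the synthesizer reports that this
    // particular prompt has finished, failed, or was cancelled.
    public static Task SpeakTaskAsync(this SpeechSynthesizer synthesizer, String message)
    {
        var completion = new TaskCompletionSource<Boolean>();

        // Creating the Prompt up front means the handler already knows which
        // prompt to wait for before speaking starts (no race with the event).
        var prompt = new Prompt(message);

        EventHandler<SpeakCompletedEventArgs> handler = null;
        handler = (sender, e) =>
        {
            // Other prompts may be spoken by the same synthesizer; skip them
            if (!ReferenceEquals(e.Prompt, prompt))
            {
                return;
            }

            synthesizer.SpeakCompleted -= handler;

            if (e.Error != null)
            {
                completion.TrySetException(e.Error);
            }
            else if (e.Cancelled)
            {
                completion.TrySetCanceled();
            }
            else
            {
                completion.TrySetResult(true);
            }
        };

        synthesizer.SpeakCompleted += handler;
        synthesizer.SpeakAsync(prompt);
        return completion.Task;
    }
}
```

With such a helper, Say in the SpeechChannel could become an async method that awaits `_synthesizer.SpeakTaskAsync(message)`, which would also make it easy to speak queued messages strictly one after another. On the UI thread, the task should be awaited rather than blocked on with Wait().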

Configuration Settings

In order to use the speech client we still need to enter some keys and addresses. All the configuration is performed in the app.config of the provided source code.

The following snippet illustrates the fields that need to be filled out.

<?xml version="1.0" encoding="utf-8"?>
<configuration>
  <appSettings>
    <add key="LuisApplicationId" value="/* Paste Your LUIS Application ID here */" />
    <add key="LuisSubscriptionId" value="/* Paste Your LUIS Subscription ID here */" />
    <add key="SpeechLocale" value="en-US" />
    <add key="BingPrimaryKey" value="/* Paste Your Bing Speech-To-Text Primary Key here */" />
    <add key="BingSecondaryKey" value="/* Paste Your Bing Speech-To-Text Secondary Key here */" />
    <add key="DirectLineSecret" value="/* Paste Your Direct Line Secret here */" />
    <add key="SmartBotEndpoint" value="/* Paste Your Chat Bot API Endpoint here */" />
  </appSettings>
  <!-- ... -->
</configuration>

Besides the LUIS subscription, which gives Bing Speech-To-Text more information and a model to back its resolution, we also need a Bing Speech-To-Text subscription. This is currently available as a preview. On the webpage we can access the primary and secondary keys. Similarly, we require the secret for the Direct Line connection. This ensures our client is authorized to talk to our bot adapter.
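Since every value in the snippet above except the locale is a placeholder, a forgotten key tends to surface only later as a failing service call. A small startup check can catch this early. The following is a minimal sketch, not part of the provided source code: the SettingsValidator class and its methods are hypothetical names, and treating any value that starts with "/*" as an unfilled placeholder is an assumption based on the template above.

```csharp
using System;
using System.Collections.Generic;
using System.Collections.Specialized;
using System.Linq;

static class SettingsValidator
{
    // The keys listed in the app.config snippet above
    private static readonly String[] RequiredKeys =
    {
        "LuisApplicationId", "LuisSubscriptionId", "SpeechLocale",
        "BingPrimaryKey", "BingSecondaryKey", "DirectLineSecret", "SmartBotEndpoint"
    };

    // Returns the keys that are missing, empty, or still contain the
    // "/* Paste Your ... */" placeholder text from the template
    public static IReadOnlyList<String> FindUnconfigured(NameValueCollection settings)
    {
        return RequiredKeys
            .Where(key =>
            {
                var value = settings[key];
                return String.IsNullOrWhiteSpace(value) || value.TrimStart().StartsWith("/*");
            })
            .ToList();
    }

    // Throws with a readable message if any required setting is not filled in
    public static void EnsureConfigured(NameValueCollection settings)
    {
        var missing = FindUnconfigured(settings);

        if (missing.Count > 0)
        {
            throw new InvalidOperationException(
                "Missing app.config settings: " + String.Join(", ", missing));
        }
    }
}
```

Since ConfigurationManager.AppSettings is a NameValueCollection, the check could run at startup as `SettingsValidator.EnsureConfigured(ConfigurationManager.AppSettings)`. Taking the collection as a parameter instead of reading ConfigurationManager directly keeps the check easy to test.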

Points of Interest

The system is quite flexible and can easily be used to integrate other home automation facilities. Personally, I've also added monitoring for some server infrastructure and multimedia offerings. The days when I depended on a dedicated app to control each platform are over. I just need one messenger or another with my chat bot in sight. Then I can simply write messages like "stop playing current movie" or "turn the volume up by 5%". With the system as proposed, adding new intents is fairly simple and straightforward.

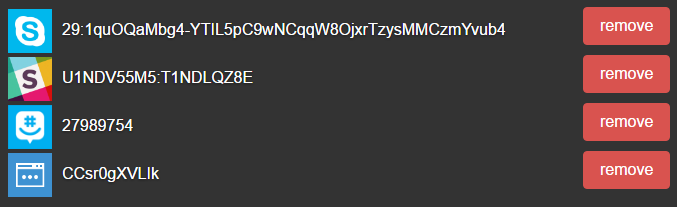

Right now I have 3 channels permanently connected to my bot. The fourth channel is the web chat, which is shown in the following screenshot for completeness.

This way it is possible to use the chat bot via multiple channels, e.g., Skype, Slack, and GroupMe. Of course, the conversations in these three channels are distinct. As an extension, however, we could store the conversation-specific logic on the local chat bot client. This way we could easily break the conversation boundaries and allow, e.g., answering a question in one client with information from another. At the moment, however, the effort and the potential confusion for the user do not seem to justify this possibility. I'll reevaluate this in the future.

A short GIF video illustrates the basic interaction with the Bot via Skype.

References

This is a list of references that go into more detail on the different topics mentioned in the article.